M <- diag(I) / INotes on Multiple Factor Analysis

The notes contain all step-by-step calculations, tables and graphics on multiple factors analysis as found in the Abdi & Valentin (2007) chapter done in R.

Preparing the raw data

Table 1 from Abdi & Valentin (2007), p.4

| oaktype | fruity1 | woody1 | coffee1 | redfruit2 | roasted2 | vanillin2 | woody2 | fruity3 | butter3 | woody3 | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| expert | NA | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 3 |

| wine 1 | 1 | 1 | 6 | 7 | 2 | 5 | 7 | 6 | 3 | 6 | 7 |

| wine 2 | 2 | 5 | 3 | 2 | 4 | 4 | 4 | 2 | 4 | 4 | 3 |

| wine 3 | 2 | 6 | 1 | 1 | 5 | 2 | 1 | 1 | 7 | 1 | 1 |

| wine 4 | 2 | 7 | 1 | 2 | 7 | 2 | 1 | 2 | 2 | 2 | 2 |

| wine 5 | 1 | 2 | 5 | 4 | 3 | 5 | 6 | 5 | 2 | 6 | 6 |

| wine 6 | 1 | 3 | 4 | 4 | 3 | 5 | 4 | 5 | 1 | 7 | 5 |

Three studies (i.e. groups of variables) normalized by column.

\[ X_{1}= \begin{pmatrix} -0.57 & 0.58 & 0.76 \\ 0.19 & -0.07 & -0.28 \\ 0.38 & -0.51 & -0.48 \\ 0.57 & -0.51 & -0.28 \\ -0.38 & 0.36 & 0.14 \\ -0.19 & 0.14 & 0.14 \\ \end{pmatrix} \]

\[ X_{2}= \begin{pmatrix} -0.50 & 0.35 & 0.57 & 0.54 \\ 0.00 & 0.05 & 0.03 & -0.32 \\ 0.25 & -0.56 & -0.51 & -0.54 \\ 0.75 & -0.56 & -0.51 & -0.32 \\ -0.25 & 0.35 & 0.39 & 0.32 \\ -0.25 & 0.35 & 0.03 & 0.32 \\ \end{pmatrix} \]

\[ X_{3}= \begin{pmatrix} -0.03 & 0.31 & 0.57 \\ 0.17 & -0.06 & -0.19 \\ 0.80 & -0.62 & -0.57 \\ -0.24 & -0.43 & -0.38 \\ -0.24 & 0.31 & 0.38 \\ -0.45 & 0.49 & 0.19 \\ \end{pmatrix} \]

Finding the global space

Assign a mass to each observation, i.e. each observation gets a weight (row weights). Here all objects get the same weight.

\[ \mathbf{M} = \begin{pmatrix} 0.17 & 0.00 & 0.00 & 0.00 & 0.00 & 0.00 \\ 0.00 & 0.17 & 0.00 & 0.00 & 0.00 & 0.00 \\ 0.00 & 0.00 & 0.17 & 0.00 & 0.00 & 0.00 \\ 0.00 & 0.00 & 0.00 & 0.17 & 0.00 & 0.00 \\ 0.00 & 0.00 & 0.00 & 0.00 & 0.17 & 0.00 \\ 0.00 & 0.00 & 0.00 & 0.00 & 0.00 & 0.17 \\ \end{pmatrix} \]

Divide each matrix by root of first values to standardize variance. The root of the first eigenvalue of \(X_1\) is \(\varphi_1= 1.69\).

standardize_matrix <- function(x) x / svd(x)$d[1]

Z1 <- standardize_matrix(X1)

Z2 <- standardize_matrix(X2)

Z3 <- standardize_matrix(X3)\[ \mathbf{Z_1} = \varphi_1^{-1} \; \mathbf{X_1} = \begin{pmatrix} -0.34 & 0.34 & 0.45 \\ 0.11 & -0.04 & -0.16 \\ 0.22 & -0.30 & -0.29 \\ 0.34 & -0.30 & -0.16 \\ -0.22 & 0.21 & 0.08 \\ -0.11 & 0.09 & 0.08 \\ \end{pmatrix} \]

Building the global matrix

Z <- cbind(Z1, Z2, Z3)\[ \mathbf{Z} = (\mathbf{Z_1} \; \mathbf{Z_2} \; \mathbf{Z_3}) = \\ \begin{pmatrix} -0.34 & 0.34 & 0.45 & -0.26 & 0.19 & 0.30 & 0.28 & -0.02 & 0.20 & 0.36 \\ 0.11 & -0.04 & -0.16 & 0.00 & 0.03 & 0.02 & -0.17 & 0.11 & -0.04 & -0.12 \\ 0.22 & -0.30 & -0.29 & 0.13 & -0.29 & -0.27 & -0.28 & 0.51 & -0.39 & -0.36 \\ 0.34 & -0.30 & -0.16 & 0.39 & -0.29 & -0.27 & -0.17 & -0.16 & -0.27 & -0.24 \\ -0.22 & 0.21 & 0.08 & -0.13 & 0.19 & 0.20 & 0.17 & -0.16 & 0.20 & 0.24 \\ -0.11 & 0.09 & 0.08 & -0.13 & 0.19 & 0.02 & 0.17 & -0.29 & 0.31 & 0.12 \\ \end{pmatrix} \]

Computing the global PCA

The singular value decomposition of \(\mathbf{Z} = \mathbf{U \Delta V^T}\) yields

dec <- svd(Z)

U <- dec$u # or as eigendecomposition eigen(Z %*% t(Z))

d <- dec$d

D <- diag(d)

V <- dec$v\[ \mathbf{U} = \begin{pmatrix} 0.53 & -0.35 & 0.58 & 0.04 & -0.31 & 0.41 \\ -0.14 & -0.13 & -0.49 & -0.51 & -0.54 & 0.41 \\ -0.56 & -0.57 & -0.01 & 0.36 & 0.25 & 0.41 \\ -0.44 & 0.62 & 0.48 & -0.15 & -0.03 & 0.41 \\ 0.34 & 0.04 & -0.16 & -0.39 & 0.73 & 0.41 \\ 0.27 & 0.39 & -0.40 & 0.65 & -0.11 & 0.41 \\ \end{pmatrix} \]

and

\[ diag \{ \mathbf{\Delta} \} = \begin{pmatrix} 1.68 & 0.60 & 0.34 & 0.18 & 0.11 & 0.00 \\ \end{pmatrix} \]

and squaring gives the eigenvalues of the PCA

\[ diag \{ \mathbf{\Delta^2} \} = \begin{pmatrix} 2.83 & 0.36 & 0.12 & 0.03 & 0.01 & 0.00 \\ \end{pmatrix} \]

and

\[ \mathbf{V} = \begin{pmatrix} -0.34 & 0.22 & -0.03 & -0.14 & -0.55 & 0.22 \\ 0.35 & -0.14 & 0.03 & -0.30 & -0.02 & 0.38 \\ 0.32 & -0.06 & 0.65 & 0.24 & -0.60 & -0.10 \\ -0.28 & 0.34 & 0.32 & -0.31 & 0.18 & 0.18 \\ 0.30 & -0.00 & -0.43 & -0.11 & -0.19 & -0.54 \\ 0.30 & -0.18 & 0.00 & -0.67 & -0.11 & -0.11 \\ 0.30 & 0.09 & 0.22 & 0.36 & 0.38 & -0.13 \\ -0.22 & -0.86 & -0.01 & 0.12 & 0.00 & 0.22 \\ 0.36 & 0.20 & -0.45 & 0.30 & -0.19 & 0.56 \\ 0.37 & 0.01 & 0.21 & -0.18 & 0.28 & 0.28 \\ \end{pmatrix} \]

The factor score in conventional PCA would be \(\mathbf{S} = \mathbf{U \Delta}\). here however, the masses are used to weight the rows leading to

S = diag(diag(M)^(-1/2)) %*% U %*% D

colnames(S) <- paste0("GPC", 1:length(d)) # names global PC

S <- S[, -6] # kill zero column\[ \mathbf{S} = \mathbf{M^{-\frac{1}{2}}U \Delta} = \begin{pmatrix} 2.17 & -0.51 & 0.48 & 0.02 & -0.08 \\ -0.56 & -0.20 & -0.41 & -0.23 & -0.15 \\ -2.32 & -0.83 & -0.01 & 0.16 & 0.07 \\ -1.83 & 0.91 & 0.40 & -0.07 & -0.01 \\ 1.40 & 0.05 & -0.13 & -0.18 & 0.20 \\ 1.13 & 0.58 & -0.34 & 0.29 & -0.03 \\ \end{pmatrix} \]

The first PC contributes \(84.5 \%\) to the total inertia. The following table shows all contributions in percent.

con <- round(d^2 / sum(d^2), 5)

k <- data.frame(PC=seq_along(d), contribution=percent(con))| PC | contribution |

|---|---|

| 1 | 84.54% |

| 2 | 10.64% |

| 3 | 3.44% |

| 4 | 0.99% |

| 5 | 0.38% |

| 6 | 0.00% |

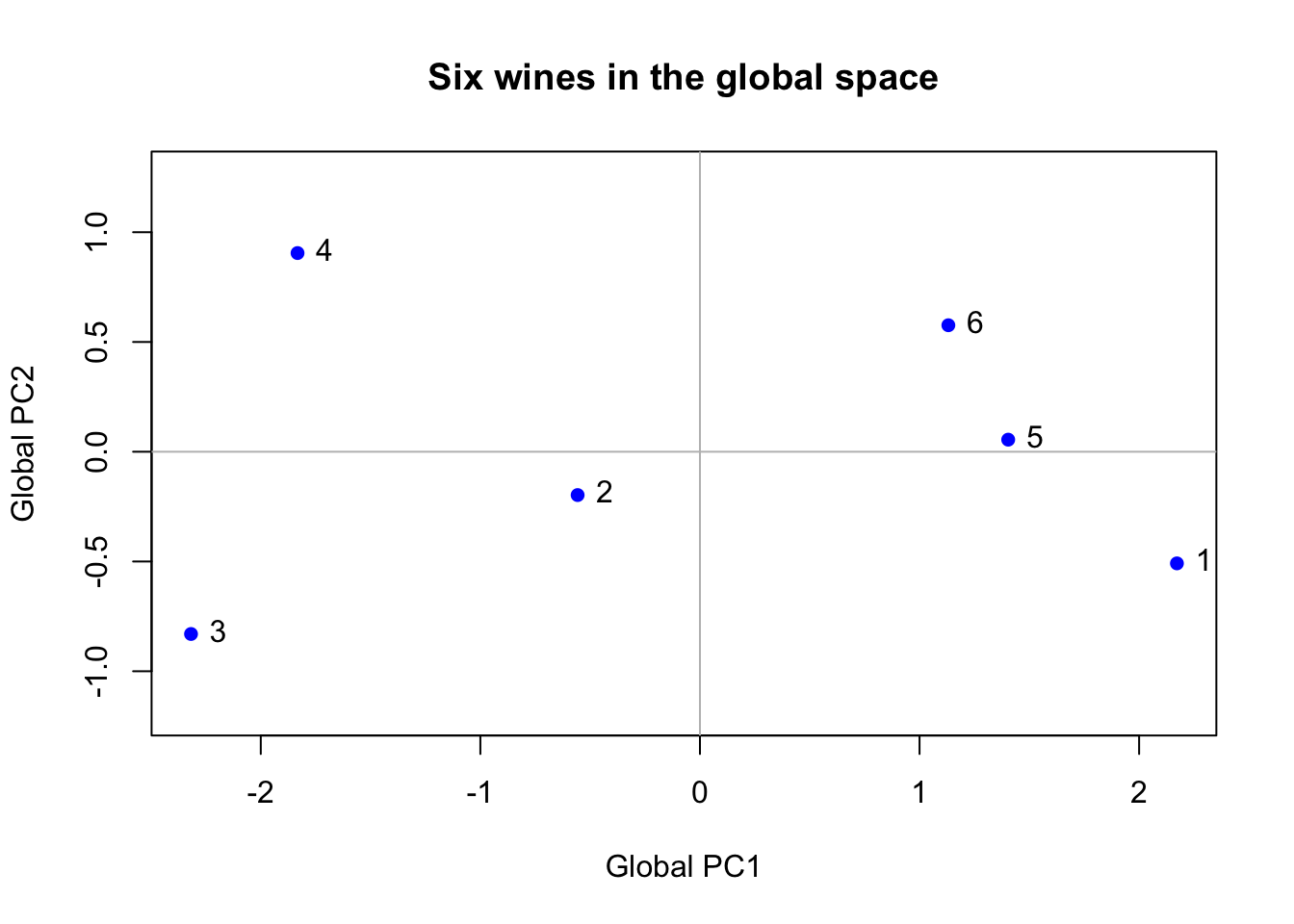

A plot of the six wines in the global PCA space.

plot(S, xlab = "Global PC1", ylab = "Global PC2", pch=16, col="blue",

asp=1, cex=1, main="Six wines in the global space")

abline(h=0, v=0, col="grey")

text(S, labels=1:6, pos=4)

Partial analysis

It can be shown that

\[\mathbf{S}=\mathbf{M^{ -\frac{1}{2} }U\Delta}\]

can be rewritten as

\[\mathbf{S}= (\mathbf{ZZ^T})(\mathbf{M^{ -\frac{1}{2} }U\Delta^{-1}})\]

This shows that the matrix \(\mathbf{P}=(\mathbf{M^{ -\frac{1}{2} }U\Delta^{-1}})\) is a projection matrix 1, that takes the crossproduct of \(\mathbf{ZZ^T}\) into the global component space.

P = diag(diag(M)^(-1/2)) %*% U %*% diag(diag(D)^-1)[,-6] # projection matrix, kill zero column\[ \mathbf{P} = \begin{pmatrix} 0.77 & -1.43 & 4.20 & 0.55 & -6.68 \\ -0.20 & -0.55 & -3.56 & -6.90 & -11.71 \\ -0.82 & -2.33 & -0.05 & 4.85 & 5.53 \\ -0.65 & 2.54 & 3.46 & -2.01 & -0.66 \\ 0.50 & 0.15 & -1.13 & -5.27 & 15.93 \\ 0.40 & 1.61 & -2.91 & 8.78 & -2.43 \\ \end{pmatrix} \]

The projection matrix is used to project the crossproduct of the single persons into the global compontent space using the formula.

\[\mathbf{S_1}= N \; (\mathbf{Z_1 Z_1^T})(\mathbf{P})\]

Note the additional \(e\) which is a scaling factor accounting for the number of studies, i.e. here \(N=3\).

N <- 3

S1 = N * (Z1 %*% t(Z1)) %*% P

S2 = N * (Z2 %*% t(Z2)) %*% P

S3 = N * (Z3 %*% t(Z3)) %*% P

colnames(S1) <- colnames(S2) <- colnames(S3) <- paste0("PC", 1:5)\[ \mathbf{S1} = \begin{pmatrix} 2.76 & -1.10 & 2.29 & 0.40 & -0.67 \\ -0.77 & 0.30 & -0.81 & -0.31 & 0.27 \\ -1.99 & 0.81 & -1.48 & -0.08 & 0.39 \\ -1.98 & 0.93 & -0.92 & 0.02 & -0.59 \\ 1.29 & -0.62 & 0.49 & -0.10 & 0.51 \\ 0.69 & -0.31 & 0.43 & 0.07 & 0.08 \\ \end{pmatrix} \]

\[ \mathbf{S2} = \begin{pmatrix} 2.21 & -0.86 & -0.74 & -0.27 & -0.06 \\ -0.28 & -0.13 & -0.35 & -0.55 & -0.52 \\ -2.11 & 0.50 & 0.77 & 0.49 & 0.01 \\ -2.39 & 1.23 & 1.57 & 0.20 & 0.68 \\ 1.49 & -0.49 & -0.62 & -0.40 & -0.13 \\ 1.08 & -0.24 & -0.63 & 0.53 & 0.03 \\ \end{pmatrix} \]

\[ \mathbf{S3} = \begin{pmatrix} 1.54 & 0.44 & -0.10 & -0.07 & 0.47 \\ -0.61 & -0.76 & -0.07 & 0.17 & -0.19 \\ -2.85 & -3.80 & 0.69 & 0.07 & -0.19 \\ -1.12 & 0.56 & 0.55 & -0.42 & -0.11 \\ 1.43 & 1.27 & -0.26 & -0.03 & 0.22 \\ 1.62 & 2.28 & -0.82 & 0.28 & -0.20 \\ \end{pmatrix} \]

The figure below shows the expert positions projected into the global space.

S.all <- rbind(S, S1, S2, S3)

plot(S.all, xlab = "Global PC1", ylab = "Global PC2", type="n",

asp=1, main="Experts projected into the global space")

abline(h=0, v=0, col="grey")

points(S, pch=16, col="blue", cex=1.5)

text(S, labels=1:6, pos=4)

ss <- list(S1, S2, S3)

for (i in seq_along(ss)) {

s <- ss[[i]]

points(s, pch=18, col=i)

for (j in 1:6)

segments(S[j,1], S[j,2], s[j,1], s[j,2], col=i)

}

The original variables and the global analysis

The loadings of the variables on the component equals the correlation between the gloabl factor score and the original or column normalized variable scores (as one is just a linear transformation the other, the correlations will be identical).

R.all = cor(cbind(S[, 1:3], X1, X2, X3)) # calc all loadings

L = t(R.all[4:13, 1:3]) # variable loadings on first 3 GPCs

# or a bit simpler to calculate using matrix multiplication

# (as scores in U are already normalized)

L = t(U[, 1:3]) %*% cbind(X1, X2, X3)

rownames(L) <- paste0("GPC", 1:3) Table 2 upper part from Abdi & Valentin (2007), p.11

| fruity1 | woody1 | coffee1 | redfruit2 | roasted2 | vanillin2 | woody2 | fruity3 | butter3 | woody3 | |

|---|---|---|---|---|---|---|---|---|---|---|

| GPC1 | -0.97 | 0.98 | 0.92 | -0.89 | 0.96 | 0.95 | 0.97 | -0.59 | 0.95 | 0.99 |

| GPC2 | 0.22 | -0.15 | -0.06 | 0.39 | -0.01 | -0.20 | 0.10 | -0.81 | 0.19 | 0.00 |

| GPC3 | -0.02 | 0.02 | 0.37 | 0.21 | -0.28 | 0.00 | 0.14 | -0.01 | -0.24 | 0.11 |

The lower part of the table shows the correlations bewtween the eigenvectors of the standardized values of the studies (i.e. \(\mathbf{Z_1}, \dots, \mathbf{Z_3}\)). We have not yet computed them, only the projections into the global space (i.e. \(\mathbf{S_1}, \dots, \mathbf{S_3}\))

# compute factor scores for PCA of every study

d1 <- svd(Z1)

d2 <- svd(Z2)

d3 <- svd(Z3)

P1 = d1$u %*% diag(d1$d)

P2 = d2$u %*% diag(d2$d)

P3 = d3$u %*% diag(d3$d)

# compute loadings, i.e. correlations with global factor scores

L2 = cor(cbind(S[, 1:3], P1[, 1:2], P2[, 1:2], P3[, 1:2]))

L2 = L2[1:3, -(1:3)]

# as the multiplication of U with the singular values is a linear

# transormation that will be reverted when calculating a correlations,

# we may simply use the unscaled values of U directly instead of UD

tUZ = t(svd(Z)$u[, 1:3]) # overall unscaled values for GPC 1-3

L2 = tUZ %*% cbind(d1$u[, 1:2], d2$u[, 1:2], d3$u[, 1:2])

L2 = L2 * -1 # see note 2

rownames(L2) <- paste0("GPC", 1:3)

colnames(L2) <- paste0("Exp-", rep(1:3, each=2), "-PC", rep(1:2, 3))Table 2 lower part from Abdi & Valentin (2007), p.11 2

| Exp-1-PC1 | Exp-1-PC2 | Exp-2-PC1 | Exp-2-PC2 | Exp-3-PC1 | Exp-3-PC2 | |

|---|---|---|---|---|---|---|

| GPC1 | 0.98 | 0.08 | 0.99 | -0.16 | 0.94 | -0.35 |

| GPC2 | -0.15 | -0.28 | -0.13 | -0.76 | 0.35 | 0.94 |

| GPC3 | 0.14 | -0.84 | -0.09 | -0.58 | -0.05 | 0.01 |

The study PCs and the loadings of the variables on the global PCs are plotted.

par(mfrow=c(1,3), mar=c(0,0,1,3))

for (i in 1:3) {

plot(c(-1,1), c(-1,1), type="n", asp=1, frame.plot = F,

xaxt="n", yaxt="n", xlab="", ylab="", main=paste("Study", i))

draw.circle(0,0,1, lwd=2)

# add global PCs

segments(c(-1,0), c(0,-1), c(1,0), c(0,1), col="blue", lwd=2)

text(0, .8, "GPC1", col="blue", cex=.9, pos=3)

text(.8, 0, "GPC2", col="blue", cex=.9, pos=3)

# add variable loadings in global space

P = t(L[1:2, grep(i, colnames(L))])

points(P, pch=16, col="red", cex=1.3)

text(P, labels=rownames(P), cex=.9, pos=4, xpd=T)

# add study PC loadings

G = t(L2[1:2, grep(paste0("-", i), colnames(L2))])

points(G, pch=15, col="darkgreen", cex=1.3)

text(G, labels=paste0("PC", 1:2), cex=.9, pos=2, col="darkgreen")

}

Figure 3. Circle of correlations from from Abdi & Valentin (2007), p.12

Analyzing the between study structure

The partial inertias for each study is calculated. The partial inertia refers to the contribution to the vectors in \(V\) that span the basis of the space, that are attributed to each study. They are calculated as the sum of the squared projections of the variables on the right singular vectors of \(Z\). As the eigenvectors of \(V\) are normalized (i.e. the squared values add up to \(1\)), multiplying by the corresponding eigenvalue, the sum of the partial inertias for all the studies for a given dimension they will add up to the eigenvalue.

In = V^2 %*% D^2 # sum squared values for each study multiplied by eigenvalue

e <- rep(1:3, c(3,4,3)) # experts

colnames(In) <- paste("Axis", 1:6)

a <- by(In, e, colSums)

a <- do.call(rbind, a)

a <- rbind(a, colSums(a))[, -6] # remoce axis 6 as eigenvalue is zero

rownames(a) <- c(paste("Expert", 1:3), "Eigenvalues (Lamda)")Table 3. Partial inertias for the first three dimensions from Abdi & Valentin (2007), p.13.

| Axis 1 | Axis 2 | Axis 3 | Axis 4 | Axis 5 | |

|---|---|---|---|---|---|

| Expert 1 | 0.96 | 0.03 | 0.05 | 0.01 | 0.01 |

| Expert 2 | 0.98 | 0.06 | 0.04 | 0.02 | 0.00 |

| Expert 3 | 0.90 | 0.28 | 0.03 | 0.00 | 0.00 |

| Eigenvalues (Lamda) | 2.83 | 0.36 | 0.12 | 0.03 | 0.01 |

The figure below shows the partial inertias from each study and they contribution to each component.

par(mar=c(1,1,1,1))

plot(a[1:3,], xlim=c(0,1), ylim=c(0,1), pch=16, col="red",

frame.plot = F, xaxt="n", yaxt="n", cex=1.2, asp=1)

title(xlab="Dimension 1", ylab="Dimension 2", line = 0, col.lab="blue")

segments(c(0,0), c(0,0), c(1,0), c(0,1), col="blue", lwd=2)

text(a[1:3,], labels=1:3, pos=4, xpd=T)

Figure 4. Partial Inertia: Plot of the experts on the first two components. from from Abdi & Valentin (2007), p.14

Literature

Footnotes

Note however, that the term projection matrix is inaccurate, as the matrix is not idempotent, i.e. \(\mathbf{P}^2 \neq \mathbf{P}\), i.e. not a projection matrix in a strict sense.↩︎

Compared to Abdi & Valentin (2007) all signs of the loadings are switched. As the directions of a PC is arbitrary, this does not mean that the result are different. Still I corrected this by multiplying by \(-1\) for perfect congruence with the values from the paper.↩︎